In a hurry? The download link for the program is here. Read config_template.txt for directions on setup; it’s kind of tricky. Only runs on Windows right now. (Github source)

Why I wanted a “translate anything on the screen” button

I’m a retro gaming nut. I love consuming books, blogs, and podcasts about gaming history. The cherry on top is being able to experience the identical game, bit for bit, on original hardware. It’s like time traveling to the 80s.

Living in Japan means it’s quite hard to get my hands on certain things (good luck finding a local Speccy or Apple IIe for sale) but easy and cheap to score retro Japanese games.

Yahoo Auction is kind of the eBay of Japan. There are great deals around if you know how to search for ’em. I get a kick out of going through old random games, and I have boxes and boxes of them. It’s a horrible hobby for someone living in a tiny apartment.

There is one obvious problem, however

It’s all in Japanese. Despite living here over fifteen years, my Japanese reading skills are not great. (Don’t judge me!) I messed around with using Google Translate on my phone to help out, but that’s annoying and slow to use for games.

Why isn’t there a Google Translate for the PC?!

I tried a couple utilities out there that might have worked for at least emulator content on the desktop, but they all had problems. Font issues, weak OCR, and nothing built to work on an agnostic HDMI signal so I could do live translation while playing on real game consoles.

So I wrote something to do the job called UGT (Universal Game Translator) – you can download it near the bottom of this post if you want to try it.

UGT 0.62 released – now includes a hotkey for drag-rect selection and support for rendering Hindi https://t.co/0nIdLVmQ1B pic.twitter.com/6DdZfBAS87

— Seth A. Robinson (@rtsoft) April 12, 2020

Here’s what it does:

- Snaps a picture from the screen or HDMI signal and sends it to Google to be analyzed for text in any language

- Studies the layout and decides which text is dialog and which bits should be translated “line by line”

- Overlays the frozen frame and translations over the gameplay

- Allows copy/pasting the translated or original language or looking up a kanji by clicking on it

- Can translate any language to any language without needing any local data, as Google is doing all the work. It can handle rendering Japanese, Chinese, Korean, etc. Different fonts are automatically used for different languages (configuration in font.txt)

- Controlled by hotkeys or a gamepad

- Can read the dialog out loud in either the original or translated language

- Can drag a rectangle to only translate a small area (Ctrl-F10 by default)
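For the curious, here is roughly what the "send a picture to Google" step looks like at the API level. This is a minimal Python sketch, not UGT's actual code: the helper names `build_vision_request` and `request_url` are made up for illustration, and the choice of the `TEXT_DETECTION` feature is an assumption (the post doesn't say which Cloud Vision feature UGT requests). It only builds the request; nothing is sent over the network.

```python
import base64
import json
from urllib.parse import urlencode

# Public REST endpoint for Cloud Vision image annotation.
VISION_URL = "https://vision.googleapis.com/v1/images:annotate"


def build_vision_request(image_bytes: bytes) -> dict:
    """Wrap raw image bytes (e.g. a PNG screenshot) in a text-detection request body."""
    return {
        "requests": [
            {
                # The image travels inline as base64 text.
                "image": {"content": base64.b64encode(image_bytes).decode("ascii")},
                # Assumption: plain TEXT_DETECTION; UGT may ask for something else.
                "features": [{"type": "TEXT_DETECTION"}],
            }
        ]
    }


def request_url(api_key: str) -> str:
    """The API key is passed as a query parameter."""
    return f"{VISION_URL}?{urlencode({'key': api_key})}"


if __name__ == "__main__":
    body = build_vision_request(b"placeholder bytes, not a real image")
    print(request_url("YOUR_API_KEY"))
    print(json.dumps(body)[:100])
```

POSTing that JSON body to the URL (with a real key and a real screenshot) returns the recognized text along with bounding boxes, which is what makes overlaying translations at the right positions possible.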

In the video above, you’ll notice some translated text is white and some is green. Green text is being treated as “dialog”; UGT uses a weighting system to decide what is and isn’t dialog.

If a section isn’t determined to be dialog, “line by line” is used. For example, options on a menu shouldn’t be translated all together (Run Attack Use Item), but as separate pieces like “Run”, “Attack”, “Use item”, each overlaid exactly over its original position. If a menu were translated as dialog, it would look and read very badly.
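To give a feel for what a dialog-vs-menu weighting could look like, here is a small Python sketch. Everything in it is hypothetical: the `TextBlock` fields, the individual weights, and the threshold are invented for illustration and are not UGT's real rules. The idea is just that several weak hints (multiple lines, longer text, a wide block, sentence-ending punctuation) add up to a score.

```python
from dataclasses import dataclass


@dataclass
class TextBlock:
    text: str          # OCR text for one block, lines separated by "\n"
    width_frac: float  # block width as a fraction of the screen width (0..1)


def dialog_score(block: TextBlock) -> float:
    """Hypothetical weighting: a higher score means more dialog-like."""
    lines = [ln.strip() for ln in block.text.split("\n") if ln.strip()]
    chars = sum(len(ln) for ln in lines)
    score = 0.0
    if len(lines) >= 2:
        score += 1.0  # dialog usually wraps over several lines
    if chars >= 20:
        score += 1.0  # menu entries tend to be short
    if block.width_frac >= 0.5:
        score += 1.0  # dialog boxes tend to span much of the screen
    if block.text.rstrip().endswith(("。", "！", "？", ".", "!", "?")):
        score += 0.5  # sentence-ending punctuation is a weak hint
    return score


def is_dialog(block: TextBlock, threshold: float = 2.0) -> bool:
    """Blocks at or above the (made-up) threshold get translated as one unit."""
    return dialog_score(block) >= threshold


if __name__ == "__main__":
    menu_item = TextBlock("Run", 0.1)
    speech = TextBlock("The king has been waiting for you.\nPlease hurry to the castle!", 0.8)
    print(is_dialog(menu_item), is_dialog(speech))
```

With these made-up weights, a short menu entry like "Run" scores 0 and would be handled line by line, while a wide two-line sentence scores 3.5 and would be translated as dialog.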

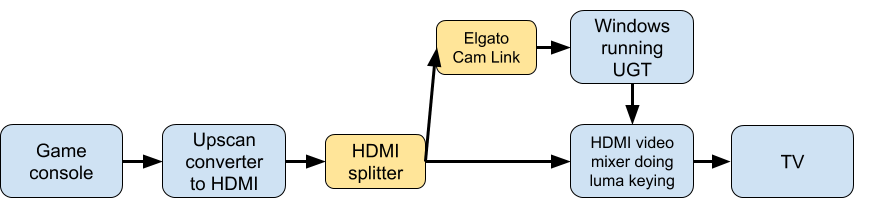

Here is how my physical cables/boxes are set up for “camera mode”. (Not required; desktop mode doesn’t need any of this, but I’ll talk about that later.)

Happy with how merging two video signals worked with a Roland V-02HD on the PlayStep project, I used a similar method here too. I’m doing luma keying instead of chroma as I can’t really avoid green here. I modify the captured image slightly so the luma is high enough to not be transparent in the overlay. (of course the non-modified version is sent to Google)
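The "make the luma high enough" trick can be sketched in a few lines of Python. This is an illustration under assumptions, not the code UGT uses: the function names are made up, the Rec. 601 luma weights are a common approximation, and the key threshold of 16 is a placeholder (the real cutoff depends on how the video mixer's luma key is configured).

```python
import math


def luma(r: int, g: int, b: int) -> float:
    """Approximate luma of an 8-bit RGB pixel using Rec. 601 weights."""
    return 0.299 * r + 0.587 * g + 0.114 * b


def lift_above_key(pixel, key_threshold: float = 16.0):
    """Brighten a pixel just enough that the luma key won't treat it as transparent.

    key_threshold is a hypothetical value; pixels already at or above it are untouched.
    """
    y = luma(*pixel)
    if y >= key_threshold:
        return pixel
    # The three weights sum to 1, so adding the same offset to every channel
    # raises luma by that offset (as long as no channel clips at 255).
    offset = math.ceil(key_threshold - y)
    return tuple(min(255, c + offset) for c in pixel)


if __name__ == "__main__":
    for px in [(0, 0, 0), (0, 0, 100), (200, 200, 200)]:
        print(px, "->", lift_above_key(px))
```

Pure black becomes a very dark gray that still looks black on screen but survives the key, while anything already bright enough passes through unchanged.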

This setup uses the Windows camera interface to pull HDMI video (using Escapi by Jari Komppa) and create the screenshots that UGT sends to Google. I’m using an Elgato Cam Link for the HDMI input.

Anyway, for 99.99999999% of people this setup is overkill, as they are probably just using an emulator on the same computer. That’s why the default is “desktop mode”, which just lets you use hotkeys (default is Ctrl-F12) to translate the active window. It’s just like having Google Translate on your PC. You can also use Ctrl-F10 to drag a rectangle to translate, and using Ctrl-F12 after that will remember that rect position/size.

Here’s desktop mode in action, translating a JRPG being played on a PC Engine/TurboGrafx 16 via emulation. It shows how you can copy/paste the recognized text if you want, which is useful for kanji study or for getting text read to you. You can also click a kanji in the game to look it up.

Try it yourself

Before you download:

- Machine translation is not great (but getting better) – this in no way replaces the work of real translators

- You need to rename config_template.txt to config.txt and edit it

- You need to enter your Google Vision API key in the config.txt. This is a hassle but it’s how Google stops people from abusing their service

- Also, you’ll need to enable the Translation API

- Google charges money for using their services after you hit a certain limit. I’ve never actually had to pay anything, but be careful.

- This is not polished software and should be considered experimental, meant for computer-savvy users

- Privacy warning: Every time you translate, you’re sending the image to Google to analyze. This could also mean a lot of bandwidth is used, depending on how many times you hit the translate button. Ctrl-F12 sends the active window only; Ctrl-F11 translates your entire desktop.

- I got bad results with older consoles (NES, Sega Master System, SNES, Genesis), especially games that are only hiragana and no kanji. PC Engine, Saturn, Dreamcast, Neo-Geo, PlayStation, etc. worked better as they usually have sharper fonts with full kanji.

- Some game fonts work better than others

- The config.txt has a lot of options, each one is documented inside that file

- I’m hopeful that the OCR and translations will improve on Google’s end over time. The nice thing about this setup is that the app doesn’t need to be updated to take advantage of those improvements, or even of additional languages that are supported later

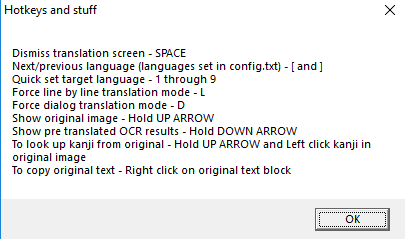

While a translation is being displayed, you can hit ? to show additional options. Also, this screenshot is outdated; use the real app to see the latest.

Misc videos of features

Released Universal Game Translator V0.64 : now by default the right joystick button will trigger translations of the active window while nicely sharing the gamepad, don't have to touch the keyboard at all. Here's me playing a PS1 game via Retroarch. https://t.co/0nIdLVEqT9 pic.twitter.com/dCoolo8AoP

— Seth A. Robinson (@rtsoft) December 2, 2020

Added a "Push E to export to HTML" feature in UGT 0.65, helps me with Japanese study because now I can use the excellent chrome extension rikaichan/rikaikun on game dialog. Here's a crappily made video using FFXIII (PS3) as an example pic.twitter.com/e4gMNcTMef

— Seth A. Robinson (@rtsoft) February 5, 2021

Latest changes

10/31/2021 – V0.72 Beta

This release has bugfixes and new features to support using UGT in tandem with other translation software that works with clipboard data.

- FEAT: Can now disable translations completely (useful if you only want the OCR features) – set the active language to “00”. Config text file has an example showing how to do it

- FEAT: Added “Invisible mode (no overlay)” checkbox to the in-app options, if checked, UGT won’t actually overlay anything over the game. (it will look like it’s not doing anything, but as long as you’ve selected to put the text on the clipboard in the config.txt, this should work for people who are using another app to view the pre or post translations)

- BUGFIX: Export to html handles line-by-line correctly

- When copying things to clipboard, no longer includes the extra whitespace or last CR. Hm, or did we want the last CR? Oh well, let’s try this (jotumn)

- BUGFIX: Fixed freeze/crash that happened when Google processes text as upside-down (Meerkov)

- Added deepl_api_url config.txt setting, allows you to switch to the paid URL if needed. Previously it just would give a wrong URL error if you used the paid version, oops. (RandomTemere)

- Pre-translated text blocks are now colored similar to post-translated text (ie, green for dialog), was kind of confusing that they weren’t before.

11/01/2021 – V0.73 Beta

- BUGFIX: UGT screen shouldn’t be able to end up off the screen in an unusable position. Sometimes it takes two clicks to fix so it’s still slightly sketch though (Cloudstrifeff7)

- Now adding spaces in “dialog” mode text at a CR even when Google thinks it’s a continuation of a word. Google seems to be wrong a lot, so hopefully this is better overall. (no change for Asian languages) (Cloudstrifeff7)

- BUGFIX: Alt-tabbing and opening UGT’s main menu no longer causes it to forget the last custom rect scan area set (Cloudstrifeff7)

11/07/2021 – V0.74 Beta

- BUGFIX: Fixed ugly bug with the hotkey system creating non-functional duplicates, not sure if it broke anything big but it can’t be good

- BUGFIX: Changed timing so it doesn’t sometimes cause weird laggy behavior in Windows 11, probably helps with earlier versions too

- Now gives a nice message if a hotkey can’t be registered with instructions on why and how to fix it (Cloudstrifeff7)

(Older history moved here, was getting outrageously long)

Download

NOTE FOR UPGRADING: It’s recommended to start with the config_template.txt again. Just copy over your Google API key and rename it to config.txt.

Download Universal Game Translator for Windows (64-bit) (Codesigned by Robinson Technologies)

Get an error about DXINPUT_1.dll missing? Please install The Microsoft DirectX® End-User Runtime

This is an open source project on Github

Conclusion and the future

Some possible upgrades:

- A built-in kanji lookup might also be nice. Jim Breen’s dictionary data could work for this.

- My first tests used Tesseract to do the OCR locally, but without additional dataset training it appeared to not work so hot out of the box compared to results from Google’s Cloud Vision. (They use a modified Tesseract? Not sure) It might be a nice option for those who want to cut down on bandwidth usage or reliance on Google. Although the translations themselves would still be an issue…

I like the idea of old untranslated games being playable in any language. In fact, I went looking for famous non-Japanese games that have never had an English translation and had a really hard time finding any, especially on console. If anyone knows of any I could test with, please let me know.

Also, even though my needs focus on Japanese->English, keep in mind this also works to translate English (or 36 other languages that Google supports OCR with) to over 100 target languages.

Test showing English being translated to many other languages in an awesome game called Growtopia