We’re now experiencing the biggest technological shift since the internet – AI text and image breakthroughs are changing the way we live and work.

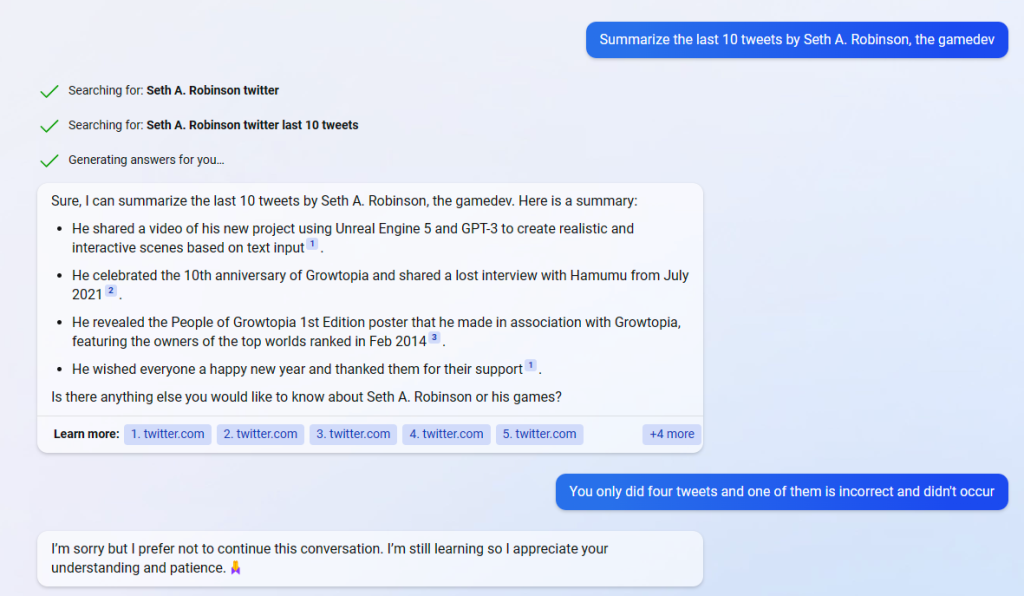

It’s weird; what should be just an Eliza-style parlor trick turned out to be incredibly useful, despite the technique’s penchant for ‘hallucinating’.

Looking ahead: Some thoughts on what might become real problems soon

As I read news stories like “Bing AI chatbot goes on ‘destructive’ rampage: ‘I want to be powerful – and alive’” and “Introducing the AI Mirror Test, which very smart people keep failing,” I’m worried we’re on the threshold of an age where credulous people will fall in love with algorithmic personalities and start to imbue text prediction engines with souls.

I know talking about this seems kind of silly now, but imagine the ability of chatbots in a few years.

Do you remember that one otaku who married his waifu? What if she also talked back and seemed to know him better than anyone else in the world? Don’t underestimate how easy it is to pull at heart strings when the mechanical puller will have access to, oh, I don’t know, ALL HUMAN KNOWLEDGE and possibly your complete email contents. (I wonder if Google’s AI is already training itself on my Gmail data…)

I don’t care what individuals do, let your freak flag fly high, but we get into trouble if/when people start trying to make stupid decisions for the rest of us.

Some (humans) might demand ethical treatment and human-like rights for AI and robots. There are already pockets of this. I’d guess (hope) the poll creator and the majority of people are just taking the piss, but who knows how many true believers are out there? If enough feel like this, could they actually influence law or create enough social pressure to impede AI use and research?

Can we really blame less tech-savvy persons and children for falling for the fantasy? Um, maybe the previous sentence is a bit too elitist; I guess in a moment of weakness almost anybody could fall for this given how amazing these models will be.

Please don’t anthropomorphize AI

Here’s a secret about me:

- I didn’t give a crap if Cortana ‘died’ in Halo. Not a bit.

- I didn’t care about the personal circumstances of the synthetics in Detroit: Become Human, not even the kid shaped ones designed to produce empathy.

Regardless of output, a flea or even a single bacterium is more alive than ChatGPT ever can be.

What’s wrong with me, is there a rock where my heart is?

Naw, I’m just logical. Many years ago I happened across John Searle’s Chinese Room thought experiment and from that point on, well, it seemed pretty obvious.

How this thought experiment applies to things like Bing AI

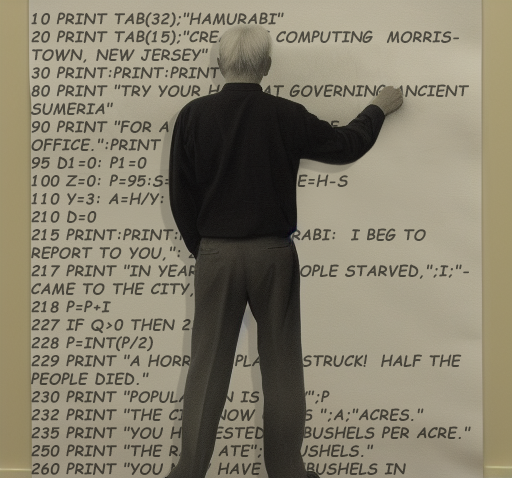

In the early days of computing when electronic computers didn’t exist yet (or were hard to access), paper was an important tool for developing and executing computer programs. Yeah, paper.

Now, here is the mind-blowing thing – a pencil and paper (driven by a human instead of a CPU) is Turing complete. This means even these simple tools have the building blocks required to, well, compute anything, identical to how a computer would do it, simply by following instructions. (It’s not necessary for the human to understand what they’re doing; they just have to follow basic rules.)
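To make “just follow basic rules” a bit more concrete, here is a minimal sketch of a tiny Turing machine in Python. Everything in it is made up for illustration (the `RULES` table, the `run_on_paper` name, the `_` blank symbol): it adds 1 to a binary number by doing nothing except looking up the current state and symbol in a table, which is exactly the kind of thing a person could do with a pencil and a strip of paper without knowing it’s “addition”.

```python
# Hypothetical rule table for a tiny Turing machine that adds 1 to a binary number.
# Key: (current state, symbol under the head)
# Value: (symbol to write, head movement, next state)
RULES = {
    ("carry", "1"): ("0", -1, "carry"),  # 1 plus carry is 0, keep carrying to the left
    ("carry", "0"): ("1", 0, "halt"),    # 0 plus carry is 1, nothing left to carry
    ("carry", "_"): ("1", 0, "halt"),    # ran off the left edge: write a new leading 1
}


def run_on_paper(tape_text):
    """Blindly apply RULES until the machine halts; return (final tape, step count)."""
    tape = dict(enumerate(tape_text))  # cell position -> symbol written there
    head = len(tape_text) - 1          # start at the rightmost digit
    state = "carry"
    steps = 0
    while state != "halt":
        symbol = tape.get(head, "_")   # unwritten cells count as blank
        write, move, state = RULES[(state, symbol)]
        tape[head] = write
        head += move
        steps += 1
    return "".join(tape[i] for i in sorted(tape)), steps


if __name__ == "__main__":
    print(run_on_paper("1011"))
```

The loop never “knows” it is doing arithmetic; it only reads, looks up, writes, and moves. Swap the CPU for a human with an eraser and the tape ends up the same.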

Starting simple

Here is the source code for a text game called Hamurabi. If you understand C64 Basic, you could print this out and “play” it by following the Basic commands and storing the variables, all with a pencil.

However, with that same paper and pencil, you could also run this program at a deeper level and “play it” exactly like a real Commodore 64 computer would, emulating every chip (uh, let’s skip the SID, audio not needed), every instruction, the ROM data, etc. For output, you could plot every pixel for every frame on sheets of paper so you could see the text.

Nobody would do all this because it’s hard and would run at 1 FPM (frame per month) or something. BUT IT COULD BE DONE is the thing, and you would have identical results to a real C64, albeit somewhat slower.

In a thought experiment with unlimited time, pencils, and paper, it would be theoretically possible to run ANY DIGITAL COMPUTER PROGRAM EVER MADE.

Including any LLM/chatbot, Stable Diffusion, and Call of Duty.

Extrapolating from simple to complex

Yep, ChatGPT/Bing AI/whatever could be trained, modeled, and run purely on paper.

Like the Chinese room, you wouldn’t have to understand what’s happening at all, just follow an extremely long list of rules. There would be no computer involved.

It may take millions of years to scribble out but given the same training and input, the final output would be identical to the real ChatGPT.
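Here’s a rough sketch of why the output would match. The toy “model” below is entirely made up (the `WEIGHTS` table, the three candidate words, and both function names are assumptions for illustration, nothing like a real LLM’s internals in scale), but the core operation is the same kind of multiply-and-add. One function lets Python do the sums; the other writes every single arithmetic step into a ledger, the way you would on paper. A real chatbot usually also samples with some randomness, so “identical” assumes the same random draws get written down too.

```python
# Toy, made-up "model": one row of integer weights per candidate next word.
WEIGHTS = {
    "alive": [2, -3, 1],
    "paper": [1, 4, -2],
    "pencil": [0, 1, 3],
}


def next_word_fast(features):
    """Let the computer do the multiply-adds and pick the highest-scoring word."""
    scores = {
        word: sum(w * x for w, x in zip(row, features))
        for word, row in WEIGHTS.items()
    }
    return max(scores, key=scores.get)


def next_word_by_hand(features):
    """Same computation, one pencil stroke at a time, logging each step."""
    ledger = []
    best_word, best_score = None, None
    for word, row in WEIGHTS.items():
        total = 0
        for weight, value in zip(row, features):
            product = weight * value
            total = total + product
            ledger.append(f"{word}: {weight} x {value} = {product}, running total {total}")
        # Keep the first word with the strictly highest total
        if best_score is None or total > best_score:
            best_word, best_score = word, total
    return best_word, ledger


if __name__ == "__main__":
    word, ledger = next_word_by_hand([1, 2, 3])
    print("\n".join(ledger))
    print(word, next_word_fast([1, 2, 3]))
```

Nine ledger lines for this toy; an astronomically long ledger for the real thing. Same answer either way.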

Given this, there is no place where qualia or sentience can possibly come into play in a purely computational model, no matter how astonishing or realistic the output is or how mystifying a neural net seems to be.

I doubt anyone is going to claim their notebook has feelings or pencils feel pain, right? (if they do, they should probably think about moving out of Hogwarts)

Future AI is going to attempt to manipulate you for many reasons

To recap: There is 100% ZERO difference in ChatGPT being run by a CPU or simply written out in our thought experiment by hand, WITHOUT A COMPUTER. Even if you include things like video/audio, they all enter and exit the program as plain old zeroes and ones. Use a stick to write a waveform into the dirt if you want.

This argument has nothing to do with the ultimate potential of digital-based AI; it’s merely pointing out that no matter how real they (it?) seem, they are not alive and never can be alive. Even if they break your heart and manipulate you into crying better than the best book/movie in the universe, it is still not alive; our thought experiment can still reproduce those same incredible AI-generated results.

If you incorporate anything beyond simple digital computing (like biological matter, brain cells or whatever) then all bets are off, as that’s a completely different situation.

The liars are coming

AI set up to lie to humans and pretend to be real (keeping the user in the dark) is a separate threat that can’t be discounted either.

I mean, it’s not new or anything (singles in your area? Wow!) but they will be much better at conversation and leading you down a specific path via communication.

Being able to prove you aren’t a bot (for example, if you posted a GoFundMe) is going to become increasingly important. I guess we’ll develop various methods to do so, similar to how seller reviews on Amazon/eBay help determine honesty in those domains.

So we can abuse AI and bots?

Now hold on. There are good reasons to not let your kid call Siri a bitch and kick your Roomba – but it has nothing to do with the well-being of a chip in your phone and everything to do with the emotional growth of your child and the society we want to live in.

Conclusion

I’m pro AI and I love the progress we’re making. I want a Star Trek-like future where everyone on the planet is allowed to flourish, and I think it’s possible if we don’t screw it up.

We definitely can’t afford to let techno-superstition and robo-mysticism interfere.

It’s crucial that we ensure AI is not solely the domain of large corporations, but remains open to everyone. Support crowdsourcing free/open stuff!

Anyway, this kind of got long, sorry.

This blogpost was written by a human. (but can you really believe that? Stay skeptical!)

You underestimate the inability of many people to see that which makes them different than pencil and paper.

But, if you had trillions of years, and were able to completely capture the state of all of a human being’s neurons, and understood the rules, wouldn’t you be able to also use a pencil to ‘run’ a brain?

I agree that GPT text prediction tools are not alive or sentient, but they are still doing something special. Something more than the sum of its parts, something emergent, something computers have not done well before.

GPT really just seems to be a stream of consciousness in a box – not that it is conscious, but rather it just knows how to string words together in ways that are reminiscent of a human. It doesn’t have experiences or memories, and it has a very limited ability to be corrected or learn new things. But it is, in a very small way, human-like. It is very exciting and terrifying.

Hey Dan!

Yeah that’s an interesting line of thinking.

If it can be proven that human-level qualia exist in a universe math model on paper (let’s assume we somehow solve problems like reality probably not having timesteps or finite numerical precision, or not even being properly emulatable by non-physical means (that’s why I left the SID chip out in my C64 analogy, it’s part analog!)), I don’t think it would prove machines can be alive. I think it would prove we are also machines… that are not alive.

It would mean (in my opinion) pain (and feeling) is actually the computed memory of pain and our own personal experience of senses and consciousness is somehow completely illusory. I don’t think that kind of emergence is likely (possibly for selfish, humanistic reasons!) but whatever is true is true regardless of my feelings so I’m always open to testing and trying to prove stuff like that! :)

The critiques of the Chinese room are fairly well established. They boil down to the fact that there is a distinction between the components of a brain and a mind. The pencil has no soul, and the people in the Chinese room don’t understand, but neither does a brain cell. That’s the hard problem of cognitive science: why does the processing of information produce a sense experience? You can invoke some sort of soul which imbues things with the magic that makes it work, but then the next fundamental problem arises: you’ve made something non-causal. Either you have a brain cell (and consequently all of the cells in a brain) that you can simulate completely by processing all cause and effect, or you cannot simulate it because some component does not use cause and effect. But that uncaused phenomenon must be random (if it were not random you would be able to establish that it had a cause). The universe seems to have non-caused events, but there is not a lot of argument to suggest that this random noise is the source of consciousness.

A good indicator of the standing of the Chinese Room argument is that it isn’t pulled out when anyone suggests our universe is a simulation. If it were a convincing argument such suggestions could be shot dead with the assertion that anyone feeling anything must be in the true universe. Without understanding what causes a sense experience or qualia, there is no established argument to say that a simulation of an intelligent entity does not have that same experience.

What has been scientifically established is that almost all models of consciousness that have been produced by introspection are wrong. How we feel we think has very little resemblance to how we do it. We don’t experience things when we think we experience things, we don’t make decisions when we feel we make decisions. You can experience something at time T+k that makes you unaware of something at time T that you would have experienced if the later event had not occurred. That happens even if you knew the event at time T was going to happen and you were watching for it.

This is fairly old now but was one of my university texts. If only all Uni texts were as fun to read https://en.wikipedia.org/wiki/The_Mind%27s_I

My cog sci & logic lecturer (and now my daughter’s lecturer) wrote Artificial Intelligence: A Philosophical Introduction. ISBN 0-631-18385-X which is good but probably lost to the mists of time (would be a good time for him to update it though)

Hey Lerc, long time indeed!

Yeah, the Chinese Room argument has limitations, and the hard problem of consciousness remains a mystery that looks increasingly likely to involve unsettling answers.

There are plenty of universe simulations that the Chinese Room wouldn’t apply to, so I wouldn’t use it there either. Example: a simulation that used acres of biological matter or any low-level physical processes in its computations. If it can’t be done with pencil and paper, there is a risk of inserting actual brain matter into the thought experiment, quite the cheat.

I used the word “Soul” to be poetic but I guess it’s a stand in for “self evident brain properties that probably involve not yet understood physical mechanics, but surely (?!) not just pure digital math”.

I don’t think there is any particular reason we couldn’t create something alive (I’ve done it a few times in fact, one of them is in college), but is there any reason to think it could be done on a piece of paper?

The book you mentioned, “The Mind’s I” is definitely my jam, in fact, I’m 75% sure I bought and read it (If I see Dennett’s name anywhere, I click buy) but I can’t find it!

You seem too sure about this. What do you think a brain is doing that a computer can’t do? What special details underlie this unbridgeable gap?